Access

Access this app at https://syntex.sonicthings.org

MIT License

Copyright (c) 2025 Lonce Wyse

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the “Software”), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED “AS IS”, WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

Publication

To cite this work or read more about the dataset, please use the following BibTeX entry:

@inproceedings{wyse2022syntex,
  title={Syntex: parametric audio texture datasets for conditional training of instrumental interfaces},
  author={Wyse, Lonce and Ravikumar, Prashanth Thattai},
  booktitle={NIME 2022},
  year={2022},
  organization={PubPub}
}

Contact

If you have questions, comments or suggestions about the SynTex dataset, please feel free to reach out to Lonce Wyse (lonce.wyse@upf.edu).


Overview

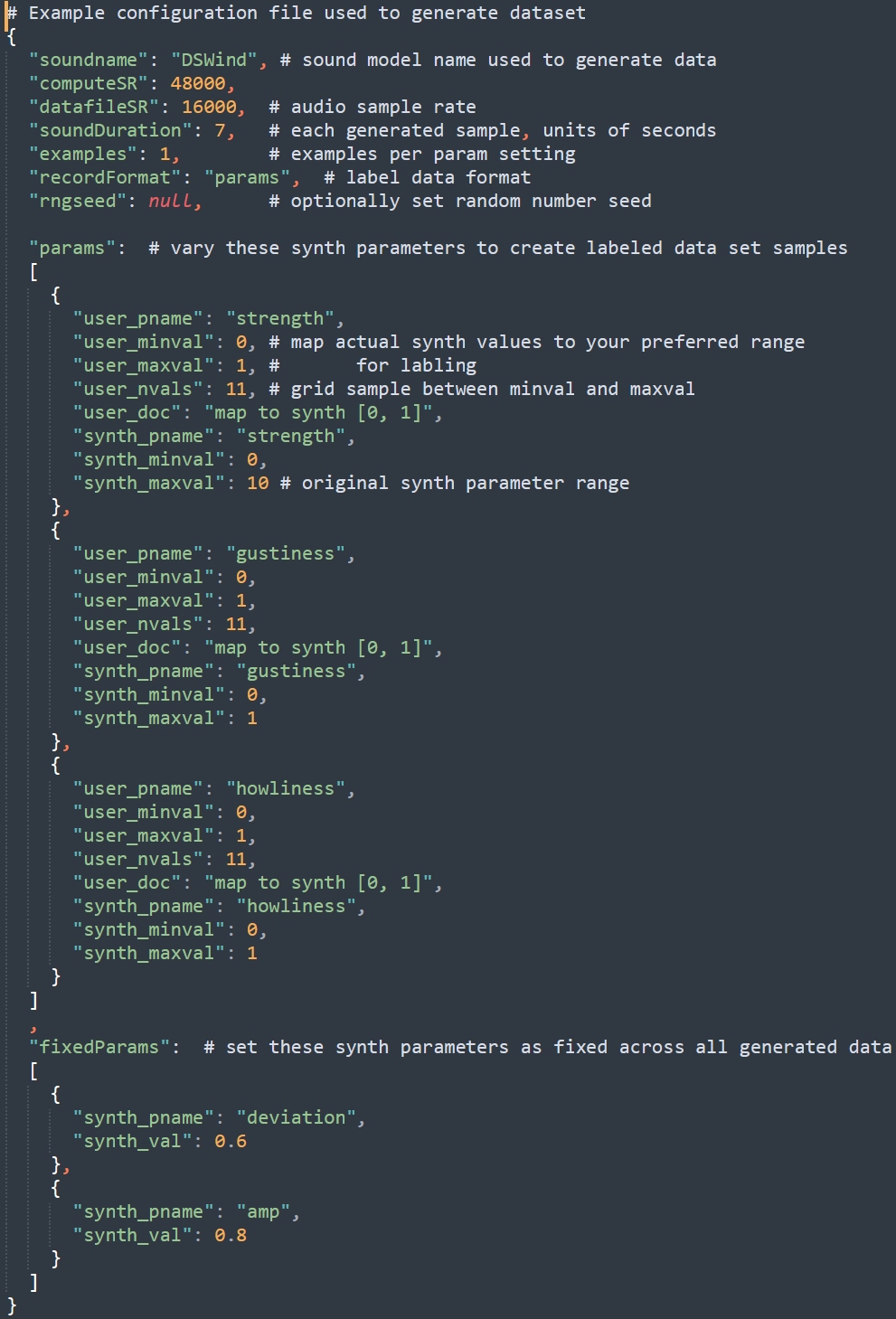

SynTex is an auto-generating dataset of sonic textures: sounds that have a stable statistical description for a given parametric configuration over a long enough window of time. These datasets can be used to conditionally train neural networks on arbitrarily complex sounds.
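To make "a stable statistical description for a given parametric configuration" concrete, here is a minimal sketch using only the Python standard library. It is not SynTex code: `toy_rain`, `windowed_rms`, and the `rate_hz` parameter are hypothetical stand-ins for a real sound model and its "how hard rain is falling" control. For a fixed parameter value, the RMS measured over successive half-second windows stays within a narrow band; changing the parameter moves that band.

```python
import math
import random


def toy_rain(rate_hz: float, seconds: float, sr: int = 8000, seed: int = 0) -> list[float]:
    """Hypothetical toy 'rain' texture: short decaying noise bursts
    ("drops") whose onsets form a Poisson process with rate rate_hz."""
    rng = random.Random(seed)
    n = int(seconds * sr)
    out = [0.0] * n
    t = rng.expovariate(rate_hz)  # onset of the first drop, in seconds
    while t < seconds:
        start = int(t * sr)
        # Each drop is 10 ms of white noise with a 2 ms exponential decay.
        for k in range(int(0.01 * sr)):
            i = start + k
            if i >= n:
                break
            out[i] += rng.uniform(-1.0, 1.0) * math.exp(-k / (0.002 * sr))
        t += rng.expovariate(rate_hz)  # exponential gap to the next drop
    return out


def windowed_rms(x: list[float], win: int) -> list[float]:
    """RMS of consecutive, non-overlapping windows of length win."""
    return [
        math.sqrt(sum(v * v for v in x[i:i + win]) / win)
        for i in range(0, len(x) - win + 1, win)
    ]


if __name__ == "__main__":
    sr = 8000
    # 8 s of audio split into 16 half-second windows per setting.
    light = windowed_rms(toy_rain(20.0, 8.0, sr), sr // 2)
    heavy = windowed_rms(toy_rain(200.0, 8.0, sr), sr // 2)
    print(f"light rain RMS range: {min(light):.3f} .. {max(light):.3f}")
    print(f"heavy rain RMS range: {min(heavy):.3f} .. {max(heavy):.3f}")
```

Because drop onsets are random, no two windows are identical, yet their statistics depend only on `rate_hz`. A real texture model would typically have richer statistics than a single RMS value.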

Examples of sonic textures include rain, engines, and machines. SynTex datasets are generated on your local machine according to an editable configuration file, creating audio and label files that can be used to conditionally train and test neural networks. The parametric description of a texture can vary (how hard rain is falling, how fast an engine is running). Parametric configurations (e.g. engine speed, piston irregularity) are grid sampled and used to generate labels for the audio files in the dataset. You can sample parameters as densely as you wish and generate as much audio as you need.
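Grid sampling of parametric configurations can be sketched as follows. This is only an illustration under assumptions: SynTex's actual configuration file format is not described here, so the keys (`sound_model`, `params`, `steps`, `variations_per_setting`), the file-naming scheme, and the helper `grid_labels` are all hypothetical. Each point of the grid becomes a label, and each label is paired with one or more audio file names that a sound model would then render.

```python
import itertools
from typing import Dict, List, Tuple

# Hypothetical configuration; the real SynTex config format may differ.
CONFIG = {
    "sound_model": "engine",
    "variations_per_setting": 2,  # audio files rendered per grid point
    "params": {
        "engine_speed": {"min": 0.0, "max": 1.0, "steps": 5},
        "piston_irregularity": {"min": 0.0, "max": 1.0, "steps": 3},
    },
}


def linspace(lo: float, hi: float, steps: int) -> List[float]:
    """Evenly spaced values from lo to hi, both endpoints included."""
    if steps == 1:
        return [lo]
    return [lo + i * (hi - lo) / (steps - 1) for i in range(steps)]


def grid_labels(config: dict) -> List[Tuple[str, Dict[str, float]]]:
    """Enumerate every grid point of the parameter space and pair it
    with (hypothetical) audio file names, one per variation."""
    names = sorted(config["params"])
    axes = [
        linspace(spec["min"], spec["max"], spec["steps"])
        for spec in (config["params"][n] for n in names)
    ]
    records = []
    for values in itertools.product(*axes):  # full Cartesian grid
        params = dict(zip(names, values))
        tag = "_".join(f"{n}-{x:.2f}" for n, x in params.items())
        for v in range(config["variations_per_setting"]):
            records.append((f"{config['sound_model']}_{tag}_v{v}.wav", params))
    return records


if __name__ == "__main__":
    records = grid_labels(CONFIG)
    print(len(records), "files")  # 5 * 3 grid points * 2 variations = 30
    for name, params in records[:3]:
        print(name, params)
```

In this sketch, sampling more densely means raising `steps` for a parameter, and generating more audio means raising `variations_per_setting` (or rendering longer files); the number of files grows with the product of all step counts.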

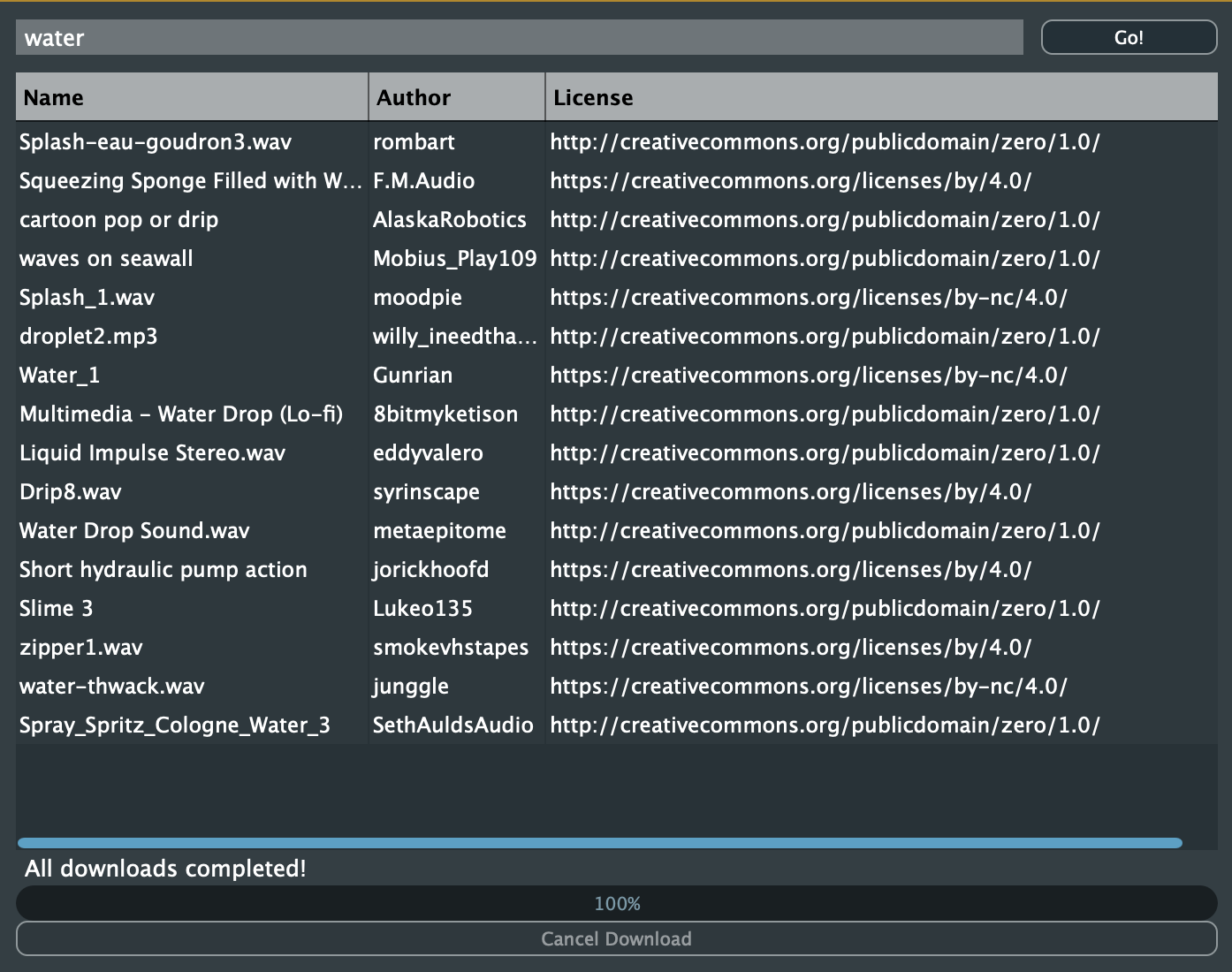

Demos

All the sound models in the collection are listed at https://syntex.sonicthings.org/soundlist. Each has precomputed audio samples, so you can listen to examples before downloading.

Build your own app

There is a Python library of sorts for creating audio textures with a uniform API, so that they can be included in these auto-generated datasets. I haven't made it public (it lacks documentation, etc.), but if you are interested in using it, please contact Lonce Wyse (lonce.wyse@upf.edu).